Serverless vs. Containers: When to Choose (And Why It Matters for Delivery)

Choosing a cloud architecture directly impacts your delivery speed, scalability, costs, and risk management. The infrastructure model you adopt will shape how quickly you can ship features, how flexibly you can scale to demand, and how much operational overhead your team carries. In other words, architecture is a strategic choice that can accelerate or bottleneck your product delivery.

Both serverless and container-based architectures are modern, cloud-native approaches to building applications. Each promises improved agility and scale compared to traditional on-premises servers. However, they suit different product and team realities.

So, what is serverless architecture? In this article, we’ll break it down, look at how it compares to containers, and explain when each approach makes the most sense for your business. We’ll also address common comparisons, such as AWS Lambda vs. containers, and explain why framing serverless vs. microservices as a direct trade-off can be misleading.

Serverless and Containers: Understanding the Two Models

Modern cloud architecture solutions generally fall into two broad models: containerization and serverless. Before comparing the models, let’s define each one in plain terms.

What Are Containers?

Containers bundle an application and everything it needs to run (code, libraries, and configuration) into a small, self-contained unit. Unlike full virtual machines, containers share the host system’s operating system kernel rather than booting their own, which makes them lightweight and fast to start. In a container-based system, your software runs inside these containers, which behave consistently across environments. This portability means you can ‘build once, run anywhere’ and stay consistent from development to production.

What Is Serverless Architecture?

Serverless computing is a cloud execution model that allows you to run your code without managing any servers. That sounds almost magical – so what does serverless mean? In reality, servers still exist, but the cloud provider fully manages them. In a serverless architecture, you write small units of code, often called functions, in a model known as FaaS (Function as a Service). These functions are deployed to a cloud service, which automatically runs them in response to events or requests. The cloud provider dynamically allocates resources to execute the function and then stops charging when the function completes.
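
To make the function model concrete, here is a minimal sketch of what such a unit of code can look like. It follows the `handler(event, context)` convention used by AWS Lambda's Python runtime; the event shape (a dictionary with an optional `name` key) and the HTTP-style response are assumptions made for illustration, not a required format.

```python
import json


def handler(event, context):
    """Entry point the platform would invoke once per event or request."""
    # `event` carries the trigger payload; its shape here is an assumption.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local stand-in for a platform invocation; no cloud account is needed.
    print(handler({"name": "delivery team"}, None))
```

Note what is absent: there is no web server, port binding, or process management in the code. Those concerns belong to the platform, which is the core of the serverless trade-off.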

Serverless vs Containers: Quick Comparison

If you’re weighing serverless computing vs containers, the following table provides a side-by-side comparison across key factors that affect delivery:

| Dimension | Serverless | Containers |
| --- | --- | --- |
| Deployment Speed | Near-instant deployment without server setup (you only configure the function itself). | Fast via CI/CD after initial environment setup (registry, cluster, pipeline). |
| Scalability & Performance | Auto-scales per request; handles bursts but has cold-start delays and time limits. | Scales via orchestrator; great for steady, long-running tasks; no cold starts, but plan for burst capacity. |
| Pricing & Cost | Pay only per execution; cost-effective for low usage, but can spike with heavy traffic. | Pay for reserved resources (even if idle); efficient for high, steady loads, but wasteful at low loads; costs are more predictable with stable usage. |
| Security & Compliance | Provider patches OS/runtime; smaller security burden for you, but limited compliance customization (must trust provider’s isolation). | Full control of environment and security; can meet strict compliance, but more responsibility and risk if misconfigured. |
| Portability & Lock-In | A proprietary environment often leads to vendor lock-in; moving providers may require code changes. | Highly portable container images run anywhere (cloud or on-prem), enabling easy migration and multi-cloud setups. |
| Team Control vs Abstraction | No infrastructure management (high abstraction) – minimal DevOps services needed, developers focus on code; trade-off is less control over environment and performance tuning. | Full control of runtime, configuration, and scaling allows for deep optimization and troubleshooting, but requires significant DevOps effort and can slow development. |
| Operational Overhead & Maintenance | Minimal ops (provider handles servers, scaling, maintenance). | High ops overhead (manage container images, deployments, orchestrator maintenance). |

From a DevOps perspective, deployment pipelines look broadly similar for serverless functions and container-based workloads; the main differences lie in infrastructure and runtime complexity.

Serverless can reduce infrastructure sprawl and save money when used for event-driven or low-frequency jobs. Still, it becomes harder to debug and maintain at scale. In real-world scenarios, an application with hundreds of AWS Lambda functions and API Gateway routes, or Azure Functions with dozens of individually configured endpoints, becomes fragile and complex. You typically must handle each trigger, integration, and environment individually, which adds operational overhead.

Serverless services also introduce limitations regarding memory, runtime duration, and cold starts, while requiring extensive configuration of auxiliary components, such as APIs and event sources. Though AWS now supports container images in Lambda, offering some flexibility, platform constraints still apply. Fully managed options, such as AWS Amplify, reduce setup effort even further, but offer little transparency and make it difficult to decouple frontend and backend. For long-term scalability and cloud portability, containerization, typically hosted on Kubernetes or similar orchestration layers, gives teams more control. However, choosing the right model ultimately depends on the workload profile, cost sensitivity, and the level of vendor lock-in that your architecture can tolerate.

How They’re Similar And Why That Can Be Misleading

At a high level, both serverless and containers aim to help teams deliver software faster and more reliably. They share a few important similarities:

- Cloud-native DNA. Both approaches are considered cloud-native. They thrive in elastic cloud environments and support modern development practices, such as agile and CI/CD.

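- Microservices and modularity. Both serverless functions and containerized apps support breaking your application into smaller, independent components (microservices). If your goal is a modular architecture with teams deploying independently, you can achieve this with either. Microservices describe how an application is split up, while serverless and containers describe how the pieces are run, which is why treating serverless vs. microservices as a direct trade-off is misleading.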

- Scalability. Both models can scale out horizontally to handle increased load. A well-designed container cluster or a well-architected serverless app can automatically scale instances/functions in response to demand, ensuring high performance and availability.

- Faster delivery and experimentation. Compared to procuring and managing physical servers, both serverless and containers significantly speed up the deployment process. They allow developers to ship code more frequently.

Business Use Cases: When to Choose One Over the Other

How do you decide between serverless and containers for your project? The answer often comes down to your delivery priorities, the nature of your application, and your team’s capabilities. Let’s break down scenarios where each model tends to shine.

When to Use Serverless Architecture

Choose serverless if your primary goal is speed and minimal overhead, and your application or workload fits certain patterns. Serverless is ideal when:

- Rapid prototyping and MVPs. If you need to get a product or feature to market quickly, serverless lets you write your functionality and deploy immediately without spending time on infrastructure.

- Event-driven and infrequent workloads. Applications that respond to events or have sporadic traffic are a natural fit for serverless. For example, an IoT sensor that sends data occasionally can trigger a function to process it. Running a server 24/7 is hard to justify for such intermittent tasks. With serverless, you pay nothing for compute when no events occur, and the platform scales out when a burst of events arrives.

- Spiky or unpredictable traffic. If your usage pattern has spikes, serverless can handle the surge automatically. You don’t have to pre-provision for peak load. This provides resilience and potentially cost savings, as you avoid paying for large servers that sit idle most of the time.

- No DevOps crew. If you’re relying primarily on developers and want to minimize the DevOps burden, serverless is attractive. The cloud provider handles uptime, scaling, and infrastructure security, so your team can focus on application code.

When to Use Containers

Container-based architecture is the choice when you need more control or your application doesn’t cleanly fit the serverless mold. Consider leaning toward containers in cases such as:

- Legacy applications. Legacy applications that you’re lifting and shifting to the cloud can often be moved into containers with minimal code changes, whereas converting them to serverless would require a complete re-architecting.

- Heavy compliance or security requirements. Organizations in finance, healthcare, or other regulated industries typically have strict compliance standards. You may need your servers in a specific region, have to run certain security monitoring agents on the host, or undergo certifications that require control over the environment. Containers leave that control over the environment in your hands.

- Predictable high load. If your application has a fairly steady and high usage 24/7, running it on containers may be more cost-effective. For example, an API that consistently gets, say, 100 requests per second around the clock might be cheaper to operate on a couple of optimized container instances than as serverless functions. With containers, you can also use reserved instances or savings plans from cloud providers to reduce cost.

- Multi-cloud or cloud-agnostic strategy. Orchestration systems such as Kubernetes run in nearly all cloud and on-prem environments, offering a standard layer. You can relocate workloads by redeploying your container images elsewhere with minimal refactoring. In the serverless world, by contrast, each provider’s FaaS has its own proprietary hooks. For organizations that prioritize portability in their cloud architecture strategy, containerization is therefore the natural fit.
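
One common way to limit the lock-in described in the last point, whichever model you choose, is to keep business logic free of provider-specific types and wrap it in thin adapters. The sketch below is illustrative only: `process_order`, `lambda_adapter`, and `container_adapter` are hypothetical names, and the event shape (an HTTP-style event with a JSON string in `body`) is an assumption.

```python
import json
from dataclasses import dataclass
from typing import Tuple


@dataclass
class OrderRequest:
    order_id: str
    quantity: int


def process_order(req: OrderRequest) -> dict:
    """Provider-neutral business logic: no cloud SDK types appear here."""
    if req.quantity <= 0:
        return {"ok": False, "error": "quantity must be positive"}
    return {"ok": True, "order_id": req.order_id, "reserved": req.quantity}


def _parse(payload: dict) -> OrderRequest:
    return OrderRequest(payload["order_id"], int(payload["quantity"]))


def lambda_adapter(event, context):
    """Thin FaaS adapter; assumes an HTTP-style event with a JSON string body."""
    result = process_order(_parse(json.loads(event["body"])))
    return {"statusCode": 200 if result["ok"] else 400, "body": json.dumps(result)}


def container_adapter(raw_body: bytes) -> Tuple[int, bytes]:
    """Thin adapter a containerized HTTP server could call with the raw request body."""
    result = process_order(_parse(json.loads(raw_body)))
    return (200 if result["ok"] else 400), json.dumps(result).encode()
```

With this split, moving between models means rewriting only the adapter, not `process_order`. It does not remove lock-in created by provider-specific event sources or managed services, so treat it as a mitigation rather than a guarantee.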

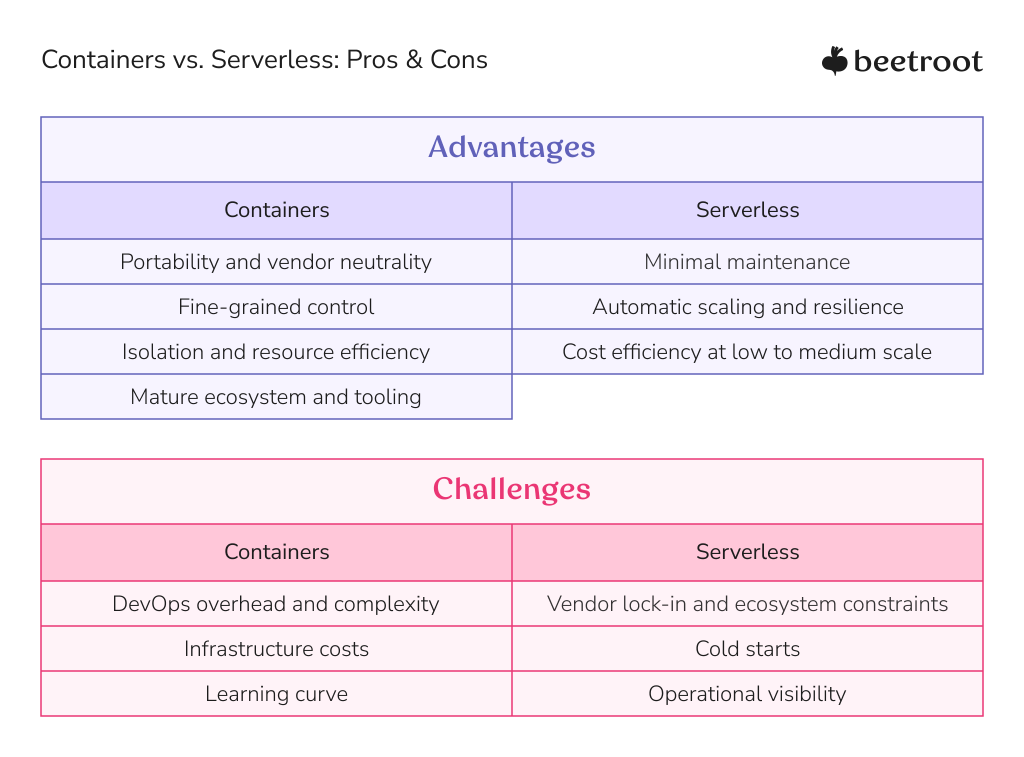

Pros and Cons of Each Approach

We’ve touched on some advantages and drawbacks in general terms. Let’s summarize the key pros and cons of serverless architecture vs. containers from a delivery perspective:

Advantages of Containers

- Portability and vendor neutrality. Containers can run anywhere – on any cloud or on-premises, so you aren’t locked into a single vendor’s ecosystem. This portability simplifies migration and enables multi-cloud strategies.

- Fine-grained control. You have control over the entire stack. This means you can optimize performance, install custom dependencies, and configure security policies to your exact needs. For complex applications, this control is invaluable in achieving reliability and efficiency.

- Isolation and resource efficiency. Containers isolate workloads from one another; each container’s problems typically don’t affect others on the same host. They are also lightweight, enabling high density without the overhead of full virtual machines. This can lead to better resource utilization when configured correctly.

- Mature ecosystem and tooling. The container ecosystem is very rich. There are established tools for logging, monitoring, continuous deployment, and security scanning of container images. The community and support around containers are extensive, which means solutions to common problems are often a Google search away.

Challenges of Containers

- DevOps overhead and complexity. Running containerized infrastructure requires significant know-how. You need to manage a cluster, set up CI/CD pipelines for container builds, and handle networking and service discovery between containers. Teams often underestimate the operational effort; tools like Kubernetes are powerful but notoriously complex for newcomers.

- Infrastructure costs. Unless it is carefully optimized, a container-based setup can leave compute resources underused, so you pay for capacity you don’t need. For instance, you may run a Kubernetes cluster with nodes sized for peak traffic; during off-peak hours those nodes sit partially idle, yet you still pay the full cost.

- Learning curve. Adopting containerization has an initial learning curve for teams. Developers need to learn how to containerize applications, and ops teams need to learn orchestration platforms. This learning investment can temporarily slow down development as everyone adapts to new workflows.

Advantages of Serverless

- Minimal maintenance. The hallmark of serverless is that you don’t manage servers. This dramatically lowers the maintenance burden. Your team can focus almost entirely on writing code that delivers business value.

- Automatic scaling and resilience. Serverless functions inherently autoscale. If your application suddenly gets 100x traffic, the platform adds capacity automatically, within the provider’s concurrency limits. Each function invocation is isolated, so one slow or erroring invocation doesn’t directly stall others.

- Cost efficiency at low to medium scale. Because of the pay-per-use model, serverless can be extremely cost-effective for certain workloads. If you have a service that’s only active a few times per day or has long idle periods, you literally pay $0 during the idle times. Even for regularly used apps, moderate usage can result in lower costs than maintaining even a small server 24/7.
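
The cost point above can be checked with a rough back-of-the-envelope model. The sketch below compares pay-per-use against always-on instances and estimates the request rate at which they cost the same. All prices in `Prices` are placeholder figures, not quoted rates, and the model is deliberately simplified: it ignores free tiers, data transfer, and whether the chosen number of instances can actually serve the load.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 30 * 24
SECONDS_PER_MONTH = HOURS_PER_MONTH * 3600


@dataclass
class Prices:
    """Placeholder prices for illustration only; substitute your provider's rates."""
    per_million_requests: float = 0.20
    per_gb_second: float = 0.0000167
    per_instance_hour: float = 0.05


def serverless_monthly_cost(rps: float, avg_duration_s: float,
                            memory_gb: float, prices: Prices) -> float:
    """Pay-per-use: a per-request fee plus compute billed in GB-seconds."""
    requests = rps * SECONDS_PER_MONTH
    request_fee = requests / 1_000_000 * prices.per_million_requests
    compute_fee = requests * avg_duration_s * memory_gb * prices.per_gb_second
    return request_fee + compute_fee


def container_monthly_cost(instances: int, prices: Prices) -> float:
    """Always-on: instances are billed for every hour, busy or idle."""
    return instances * prices.per_instance_hour * HOURS_PER_MONTH


def break_even_rps(instances: int, avg_duration_s: float,
                   memory_gb: float, prices: Prices) -> float:
    """Request rate at which both models cost the same (serverless cost is linear in rps)."""
    cost_per_rps = serverless_monthly_cost(1.0, avg_duration_s, memory_gb, prices)
    return container_monthly_cost(instances, prices) / cost_per_rps
```

Under these placeholder prices, a function averaging 100 ms at 0.5 GB is far cheaper than one always-on instance at 0.1 requests per second, and more expensive than two instances at a steady 100 requests per second. The useful output is the break-even rate for your own numbers, not the specific figures here.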

Challenges of Serverless

- Vendor lock-in and ecosystem constraints. Going serverless often means committing to a specific cloud provider’s ecosystem. Each provider has its own function interface, event formats, and complementary services. While there are frameworks to abstract this (e.g., Serverless Framework), in reality, you end up using provider-specific features that make migrating off difficult.

- Cold starts. Cold starts can introduce latency for user-facing functions. If your app has infrequent endpoints, the user may experience a delay of a few seconds the first time it’s used in a while, which could be unacceptable in some scenarios.

- Operational visibility. While serverless reduces infrastructure ops work, it doesn’t eliminate the need for operations monitoring; it changes what you monitor. You’ll want to track function execution times, memory usage, and invocation errors. The out-of-the-box metrics from cloud providers are a start, but in a complex app, you often need a tailored approach to gain visibility into how the entire system is performing.
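
As a starting point for the visibility and cold-start concerns above, the following sketch wraps a handler so that every invocation emits one structured record with its duration, outcome, and whether it ran on a fresh instance. It relies on a common technique: module-level state survives between warm invocations of the same instance, so a flag set at import time marks the first call as cold. The `instrumented` decorator and its record fields are hypothetical names, not part of any provider SDK.

```python
import functools
import json
import time

# Module scope is initialized once per instance, so this is True only
# until the first invocation on a freshly started instance.
_cold_start = True


def instrumented(emit=print):
    """Decorator factory: `emit` receives one JSON string per invocation."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(event, context):
            global _cold_start
            was_cold, _cold_start = _cold_start, False
            started = time.perf_counter()
            outcome = "ok"
            try:
                return fn(event, context)
            except Exception:
                outcome = "error"
                raise  # Re-raise so the platform still sees the failure.
            finally:
                emit(json.dumps({
                    "function": fn.__name__,
                    "cold_start": was_cold,
                    "duration_ms": round((time.perf_counter() - started) * 1000, 2),
                    "outcome": outcome,
                }))
        return wrapper
    return decorator
```

Applying `@instrumented()` to a handler prints each record to standard output, which most platforms collect as logs; passing a different `emit` callable would let you forward records to whatever monitoring tool you use.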

When to Consider a Hybrid Approach

Many organizations use both containers and serverless. Adopting a hybrid architecture enables you to leverage the strengths of each approach where they are most effective. For example:

- Event-driven glue with a stable core. You might have a core application running on containers, such as an e-commerce platform. Around this core, you can utilize serverless functions for event-driven tasks that occur irregularly, such as image processing, sending emails or push notifications, generating reports on demand, and data synchronization tasks. The core systems benefit from the performance and control of containers, while the sporadic functions take advantage of serverless efficiency.

- Extension and customization. If you provide a software platform, you might run the main platform in containers, but allow custom code or extensions to run in a serverless sandbox. This is a pattern some products use to enable user-defined functions safely. The main app remains stable in containers, and serverless provides a flexible plugin system.

- Pipeline and CI/CD tasks. DevOps teams themselves use serverless and containers in conjunction. For instance, you could run your CI/CD runners in containers, but use serverless functions for lightweight tasks, such as notifying Slack about build status or performing nightly cleanup jobs. Even in deployment pipelines, a hybrid approach can optimize cost.

- Gradual migration. Perhaps you are migrating from a monolithic architecture. You might start by containerizing the monolith to get it into a modern environment, then gradually carve out specific capabilities into serverless functions. Over time, you could end up with a mix: part of the system in containers and new features in serverless. Eventually everything may move in one direction, but the interim state is hybrid. This “strangler pattern” can reduce risk by avoiding the need to choose a single approach for everything on day one.

The business advantages of a hybrid approach include both cost optimization and flexibility. You use serverless where it clearly saves cost, and containers where they clearly add value. You also gain some resilience: even if your container cluster needs a maintenance window, your independent serverless components might continue to operate for certain tasks.
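
The gradual migration scenario usually depends on a routing layer that sends each request either to the existing application or to a newly extracted function. Here is a minimal sketch of that idea. `StranglerRouter`, `monolith`, and `reports_function` are hypothetical stand-ins; in practice this role is often played by an API gateway or reverse proxy rather than application code.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def monolith(path: str) -> str:
    """Stand-in for the containerized application that still owns most routes."""
    return f"monolith handled {path}"


def reports_function(path: str) -> str:
    """Stand-in for a capability already carved out into a serverless function."""
    return f"function handled {path}"


class StranglerRouter:
    """Routes migrated path prefixes to new handlers and everything else to the default."""

    def __init__(self, default: Handler) -> None:
        self._default = default
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        self._migrated[prefix] = handler

    def dispatch(self, path: str) -> str:
        # Longest matching prefix wins so nested routes can move independently.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        return self._default(path)
```

A router created with `StranglerRouter(default=monolith)` sends every path to the monolith until `migrate("/reports", reports_function)` is called, after which only `/reports...` paths reach the function. Each capability can therefore be moved, and rolled back, one route at a time.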

Conclusion

There is no one-size-fits-all answer in the serverless vs containers debate. The best choice depends on your product’s specific needs, your delivery timelines, and your operational capabilities. Serverless offers unparalleled speed and simplicity for getting started, along with elastic scaling that can handle unpredictable demand. Containers offer control, consistency, and flexibility, especially for complex and long-running applications.

A hybrid approach, using serverless for some components and containers for others, can provide the best of both worlds. While many treat cloud native vs serverless as distinct categories, serverless is actually one implementation of cloud-native design focused on maximum abstraction. The key is not to view it as an either/or battle, but as a spectrum of tools.

Finally, remember that you don’t have to navigate this alone. As a trusted technology partner, we leverage our cross-domain experience and access to a vast network of vetted cloud and DevOps engineers to provide you with solutions tailored to your objectives and resources.

Let’s discuss the right path for your cloud delivery model. We’re here to help you build a cloud solution that accelerates delivery and grows with your business.

FAQs

What is the difference between serverless and containerization?

Serverless refers to a cloud model where you run code without managing the underlying servers. Containerization refers to packaging an application into containers that you deploy on servers or clusters. The difference lies in management: with serverless, you deploy functions and the platform auto-scales and operates them; with containers, you have more control and must manage the container runtime environment yourself.

When should I use serverless instead of containers?

Use serverless when speed of development and minimal ops overhead are top priorities, and when your workload fits certain patterns. Serverless is great for event-driven tasks, APIs with unpredictable load, or services that don’t need to run 24/7. It’s also a good choice if you want to avoid provisioning and managing servers entirely.

Are containers or serverless better for scalability?

Both can achieve massive scalability, but in different ways. Serverless has built-in scalability, so the cloud provider will automatically spin up more function instances to handle load increases, without any intervention from you. Containers can also scale very well, but typically you’ll configure an orchestrator to add more container instances or nodes based on metrics.
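
To illustrate what metric-based scaling means on the container side, the sketch below applies the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The function name, the replica bounds, and the simplified tolerance check are assumptions for illustration; a real autoscaler also accounts for pod readiness, stabilization windows, and multiple metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, with 4 replicas averaging 90% CPU against a 60% target, the function returns 6. The bounds and the target are the settings you own with containers; with serverless, the equivalent decisions are made by the platform.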

What are the business risks of choosing the wrong architecture?

If you opt for serverless and your application runs continuously under heavy load, you may face higher costs than expected or hit service limits that impede functionality. It could also become difficult to manage hundreds of functions if your app isn’t naturally event-driven, leading to maintenance headaches and debugging challenges. Conversely, if you opt for containers for a small or sporadic workload, you may pay for idle capacity and take on DevOps overhead that slows delivery.

Can I use both serverless and containers in the same application?

Absolutely. Many applications benefit from a mix of both. A hybrid approach is commonly used in cloud architectures. For instance, you might run your core web application or database in containers, but utilize serverless functions for ancillary tasks such as processing background jobs, handling webhooks, or generating on-demand reports.
