The introduction of ChatGPT and other Large Language Models marked a turning point in Artificial Intelligence (AI) development, leaving the EdTech community both excited and reflective. The question on many minds is whether generative AI will redefine personalized education or challenge the very foundation of traditional institutions. But before we dive deeper into that topic, let’s start with some numbers that reveal the bigger picture of the global education and training sector.

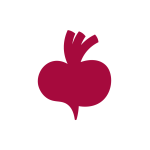

According to recent data by HolonIQ, the global education and training sector is expected to reach $10 trillion by 2030. The increasing number of K12 and post-secondary learners will catalyze this growth, particularly in Asia and Africa. By 2050, the global number of graduates is forecast to increase by 2 billion, driven by higher participation and population growth.

However, even as the knowledge economy and future skillsets demand significant digital transformation, the education sector trails behind, with technology accounting for less than 4% of global expenditure. Though COVID-19 has hastened the push toward digitization, the journey toward comprehensive digital integration in education is still unfolding.

The key factors expected to have the most impact on education by 2030 can be summarized as follows:

- Globalization and growth: Emerging markets will drive global growth, becoming appealing destinations for investment and talent as they stabilize and develop.

- Shifts in global demographics: With the world population growing by a net 200,000 people per day, education models must scale to accommodate an extra billion individuals by 2030.

- Evolving skills and job landscape: The future of work is highly unpredictable and calls for adaptive strategies for human capital development in light of automation concerns.

- Technological advancements: Breakthrough technologies like AI, ML, and blockchain are double-edged swords, presenting both opportunities and challenges. They will affect our lives in many ways, including changes in education and learning methodologies.

By 2025, AR/VR and AI will be integrated into education and training, making virtual and simulated training common in both formal education and adult upskilling.

Artificial intelligence in education technology: Key takeaways from this year’s analysis

Recent market research on technology in education estimates that the market for AI will grow from $1.10 billion in 2021 to $21.52 billion by 2028. According to another report by Microsoft, a staggering 99.4% of surveyed educators believe AI will give their institution a competitive edge in the coming years, while 15% consider it a game changer for EdTech.
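
To put the market forecast above in perspective, the two endpoints can be turned into an implied compound annual growth rate. The snippet below is a minimal sketch, not a figure taken from the cited research: the `cagr` helper is a hypothetical name, and it assumes seven full years of compounding between 2021 and 2028.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in billions of USD (assumption: 7 compounding years).
implied_rate = cagr(1.10, 21.52, 2028 - 2021)
print(f"Implied CAGR 2021-2028: {implied_rate:.1%}")
```

Under these assumptions the forecast corresponds to growth of roughly 53% per year, which is worth keeping in mind when comparing it with other market projections.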

From a technology standpoint, Vision, Voice, Language, and Analytics are projected to have the most impact on the education industry, playing a crucial role in developing intelligent EdTech solutions and embedding AI features into new and existing learning products. This should open up many opportunities for established and new industry players, with a vast consumer base to cater to across various education markets, such as testing and assessment, language learning, upskilling, corporate training, and more.

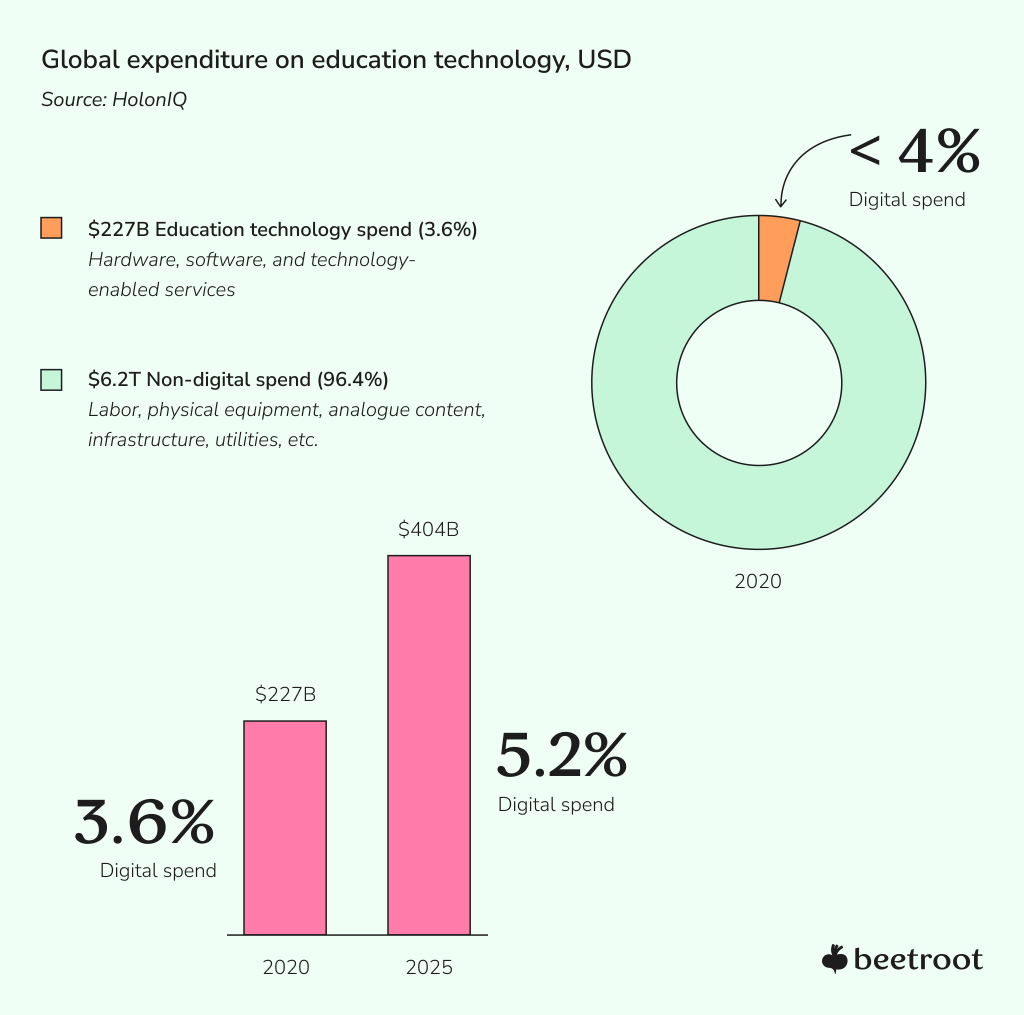

AI adoption in the education sector can positively impact learner outcomes (75% of respondents to the HolonIQ survey above consider this a priority), reduce costs (45%), and disrupt the market (43%). At the same time, talent scarcity, under-resourcing for AI, lack of data literacy and infrastructure, an unclear (or absent) organizational AI strategy, functional silos, and other limitations are the biggest challenges currently impeding rapid AI integration within organizations.

Generative AI in EdTech: Market dynamics, growth drivers, and challenges

In a nutshell, generative AI in education involves applying ML algorithms and techniques to create new content, such as text, images, and virtual environments, based on patterns learned from existing data. Analyzing existing datasets allows these AI systems to generate new materials aligned with specific learning objectives or individual learner needs.

Gen AI can simulate human-like interactions to support students with extended answers and explanations, assist language learners by generating text and speech samples for practice and self-evaluation, and create interactive virtual spaces that encourage active participation and practical learning.

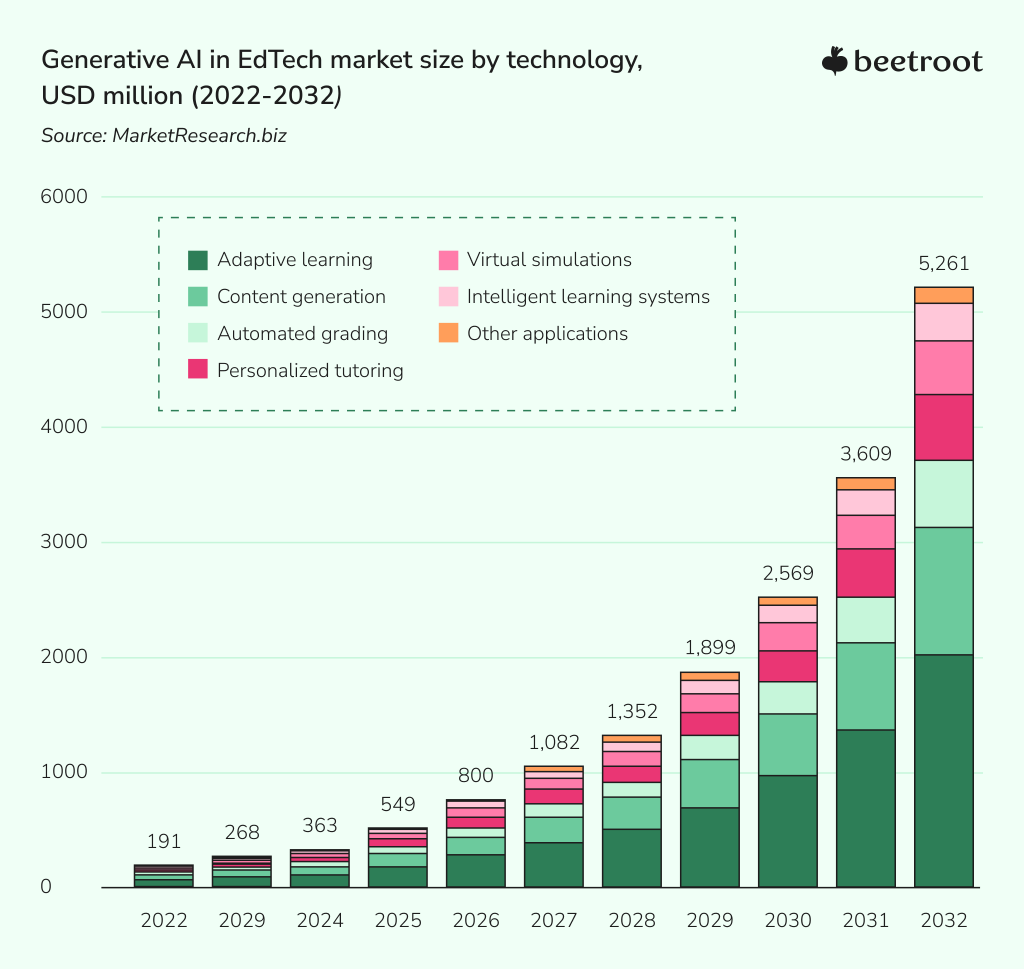

As for the numbers, market research on Gen AI in education projects the market to reach $5.3 billion by 2032, growing at a 40.5% CAGR between 2023 and 2032.
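
The projection above gives an end value and a growth rate but no starting point. As a rough sanity check, the starting market size can be backed out from those two numbers. This is an illustrative sketch only: `implied_start_value` is a hypothetical helper, and it assumes 2023 is the base year with nine compounding periods up to 2032.

```python
def implied_start_value(end_value: float, rate: float, years: int) -> float:
    """Value `years` earlier that grows to `end_value` at a constant annual `rate`."""
    if rate <= -1 or years < 0:
        raise ValueError("rate must exceed -100% and years must be non-negative")
    return end_value / (1 + rate) ** years


# Figures quoted in the text, in billions of USD (assumption: base year 2023).
base_2023 = implied_start_value(5.3, 0.405, 2032 - 2023)
print(f"Implied 2023 market size: ${base_2023 * 1000:.0f} million")
```

With these assumptions, the forecast implies a market of roughly a quarter of a billion dollars in 2023.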

Market drivers. Several key factors influence the Gen AI market progression, notably:

- Rising demand for adaptive learning and personalization;

- The exponential growth of data volumes in education boosts AI’s ability to yield valuable insights;

- Natural language processing and computer vision advancements enrich generative AI, enabling new forms of interactive learning;

- Generative AI’s scalability and cost-efficiency promote its adoption in EdTech.

Restraining factors. To balance some heightened expectations, let’s keep in mind that Gen AI, while promising, isn’t a magic solution. Its adoption is still early and requires further experimentation and refinement to reap tangible, sustained benefits. Moreover, there are recognized challenges and risks associated with AI, including:

- Privacy and ethical concerns related to the gathering and use of student data;

- Reliability and quality of Gen AI models;

- The complexity and resource intensity of Gen AI integration into educational systems;

- The shortage of skilled AI professionals;

- Reluctance from educators and learners to fully adopt AI-driven education.

How do companies respond to the new LLM stack?

November marks a year since the launch of OpenAI’s ChatGPT, a large language model-based chatbot that made waves in the tech world. Companies worldwide increasingly integrate natural language capabilities into their products by leveraging LLM APIs. A recent Sequoia Capital survey highlighted insights from 33 of their affiliated companies on current AI adoption:

AI-based features have become a staple integration for most companies

Auto-complete features have made impressive advancements in fields like coding and data science. Chatbots have also improved across customer support, employee assistance, and entertainment. An AI-first approach is being embraced by many sectors, from visual art, marketing, sales, and contact centers to legal, accounting, productivity, data engineering, and search, marking just the beginning of this transformative trend.

The rising uptake of large language models and related technologies

Companies are integrating LLM APIs for natural language capabilities, with ChatGPT being a popular choice. Vector databases are essential for improving result quality; LLM orchestration, monitoring, cost management, and A/B testing are emerging areas of interest. Some explore complementary generative technologies that blend text and voice.
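
To illustrate why retrieval improves result quality, here is a toy sketch of the idea behind a vector database: find the stored passage most similar to the user's question and place it in the prompt. Everything in it is an assumption made for illustration. Word counts stand in for real embeddings, an in-memory list stands in for the database, and the function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vector, embed(doc)), reverse=True)
    return ranked[:k]


# Hypothetical company documents.
docs = [
    "Vacation policy: employees accrue two days of paid leave per month.",
    "API guide: authenticate each request with a bearer token.",
    "Product list: the starter plan includes five user seats.",
]
question = "How do I authenticate an API request?"
context = top_k(question, docs, k=1)

# The retrieved passage is placed in the prompt sent to the language model.
prompt = f"Answer using only this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

A production setup would swap the word counts for model-generated embeddings and the list for a dedicated vector store, but the ranking step follows the same pattern.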

Companies aim to customize language models to their unique context

Numerous businesses require more than general language models to enable natural language interactions on their data, such as developer documentation, product lists, HR policies, or IT guidelines. Often, they need to customize AI models to fit individual user inputs like personal notes, design layouts, codebases, and data metrics. This has led to a notable increase in bespoke LLM development in parallel with LLM API integration, while other stack components are at earlier stages of maturity.

The stacks for LLM APIs and custom model training are gradually merging and becoming more user-friendly

Language model APIs have broadened access to powerful models, empowering developers beyond ML teams. This trend has led to the emergence of developer-focused tools like LangChain that simplify LLM application development by addressing common challenges, from model integration to tool compatibility and preventing vendor lock-in.
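
One common way to limit vendor lock-in is to have application code depend on a small interface rather than on a specific provider's client. The sketch below shows the pattern in plain Python. It is not LangChain's API: the `TextModel` protocol and both stand-in model classes are hypothetical, and neither calls a real service.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface the application depends on."""

    def complete(self, prompt: str) -> str: ...


class HostedModelStub:
    """Stand-in for a hosted LLM API client (no network call is made)."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


class CustomModelStub:
    """Stand-in for a self-hosted or fine-tuned model."""

    def complete(self, prompt: str) -> str:
        return f"[custom] {prompt}"


def summarize(model: TextModel, text: str) -> str:
    """Application logic that works with any model satisfying TextModel."""
    return model.complete(f"Summarize: {text}")


# Switching providers means changing one argument, not the application code.
print(summarize(HostedModelStub(), "course feedback"))
print(summarize(CustomModelStub(), "course feedback"))
```

The same seam also makes it easier to mix a hosted API with a custom-trained model inside one product.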

LLM APIs were initially considered a replacement for custom training, but more companies are now interested in developing and fine-tuning their models. The future will likely see a hybrid approach that combines the advantages of both methods.

Language models must be trustworthy in output quality, data privacy, and security

Before integrating LLMs widely, many companies prioritize enhancing data privacy, security, and model oversight, especially in regulated sectors like FinTech and healthcare. They desire tools to identify and mitigate model errors, harmful content, and security breaches. Trust in language models will likely increase with evolving policies, fueling broader adoption.
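
As one small example of the kind of safeguard described above, personal data can be stripped from text before it is sent to an external model. The sketch below redacts email addresses with a regular expression. It is deliberately simplistic: the pattern and the `redact_emails` name are assumptions for illustration, and real deployments would need to cover many more kinds of sensitive data.

```python
import re

# Simplified pattern for illustration; it does not cover every valid email address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


message = "Contact jane.doe@example.edu about grades."
print(redact_emails(message))
```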

Language model apps will become more multi-modal

As generative models evolve, they promise richer AI applications that blend text, speech/audio, and image/video generation for enhanced user experiences and complex tasks. Companies are innovatively merging generative models, like combining text and speech for better conversations and quick video overdubbing.

AI is still in the early stages of integration

Only 65% of Sequoia’s respondents have adopted AI, mainly using it for basic applications. As more companies embrace AI solutions, fresh challenges will arise, opening doors for entrepreneurs. The infrastructure supporting AI will evolve rapidly in the coming years, and we are excited for the journey ahead with a plethora of demos to explore.

Maximizing positive outcomes of AI development through a balanced approach

When it comes to AI, the journey is as crucial as the destination. Navigating the world of AI requires a balance between human skills and technology. Combining these two elements can lead to organizational growth and drive positive changes in society. A deep understanding of machine learning helps quickly process and understand complex data, essential for identifying valuable opportunities. Such an approach doesn’t just promise value — it delivers it immediately, creating ripples of impact from the onset.

Yet, for AI solutions to be accepted and trusted, they must be clear, ethical, and responsible, especially in education. Given its profound influence on shaping young minds and the sector’s handling of sensitive data, accountable and ethical AI should ensure unbiased, fair, and value-aligned content delivery, maintaining the utmost data privacy while avoiding misinformation and flawed methodologies. Gaining the trust of educators, students, and parents is foundational for the widespread adoption of AI tools, and adhering to ethical standards fosters this trust and ensures regulatory compliance.

Another important consideration is that partnerships are key when implementing AI. It’s not just about developing solutions but ensuring they are integrated into the organization and have a lasting impact. Every AI project is unique, so a flexible approach is necessary to meet specific requirements. Diverse teams combining various skills are best poised to address these unique challenges effectively.

At Beetroot, we fundamentally view technology as an enabler, a transformative tool that can genuinely improve lives. Our commitment is to guide you in leveraging AI, not merely as a solution but as a powerful catalyst for broader positive change. With us by your side, your AI journey can maximize outcomes, upholding the highest efficiency and ethical standards.
