Scaling AI for Success: Considerations on AI Advancement in EdTech

As we continue to explore the transformative role of AI in the education technology space, it’s evident that artificial intelligence is driving innovation at an unprecedented pace. AI-powered tools and applications are being used to personalize learning, provide real-time feedback, and automate education-related tasks, helping EdTech products better understand individual learning needs, operate efficiently, and serve a growing number of users.

According to Deloitte Insights, most companies aspire to be at the forefront of AI in their industry or market. However, many struggle to reach this goal because their AI maturity still needs substantial improvement: as of the beginning of 2023, just 15% of organizations regarded their level of AI advancement as mature.

The role of data in AI advancement

Quality data forms the bedrock of AI initiatives and can help businesses of all sizes establish benchmarks and goals for continued growth. It’s impressive how well generative AI models can understand and create text. However, their performance largely depends on the data used for training. That’s why having high-quality data is crucial for these systems to operate efficiently and produce dependable outcomes.

- The quality of input data (high-grade language samples) is the cornerstone of effective learning in language models, especially when fine-tuning pre-trained models, because it directly affects the model’s ability to comprehend and generate language.

- Low-quality training datasets carry risks beyond poor outputs. When trained on biased data or fed inaccurate information, language models may inadvertently perpetuate embedded biases and generate misleading or even harmful results.

- Data governance involves verifying data accuracy and currency, eliminating bias, implementing inclusive guidelines in content language, maintaining high writing quality (grammar and spelling), and sourcing from diverse data sets to maintain language model quality and promote ethical and responsible AI use.
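
Some of these governance checks can be automated as a first pass before human review. The sketch below makes several assumptions. The helper names (`screen_samples`, `QualityReport`), the five-word minimum and the flag-term list are all hypothetical choices. Simple term matching is only a crude trigger for review and is not a bias detector.

```python
import re
from dataclasses import dataclass, field


@dataclass
class QualityReport:
    kept: list = field(default_factory=list)        # samples that passed every check
    for_review: list = field(default_factory=list)  # samples routed to a human reviewer
    duplicates: int = 0
    too_short: int = 0


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivially different copies deduplicate.
    return re.sub(r"\s+", " ", text.strip().lower())


def screen_samples(samples, min_words=5, flag_terms=frozenset()):
    """Hypothetical first-pass screen: deduplicate, drop very short samples,
    and route samples containing flagged terms to human review."""
    report = QualityReport()
    seen = set()
    for sample in samples:
        key = normalize(sample)
        if key in seen:
            report.duplicates += 1
            continue
        seen.add(key)
        words = key.split()
        if len(words) < min_words:
            report.too_short += 1
        elif flag_terms & set(words):
            report.for_review.append(sample)
        else:
            report.kept.append(sample)
    return report


samples = [
    "The water cycle moves water between land, sea, and air.",
    "the water cycle  moves water between land, sea, and air.",
    "Too short.",
    "This sample still contains lorem ipsum placeholder text.",
]
report = screen_samples(samples, flag_terms={"lorem"})
print(len(report.kept), report.duplicates, report.too_short, len(report.for_review))
```

Flagged samples are routed to reviewers instead of being dropped silently. This keeps people in the loop for judgment calls about bias and inclusive language.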

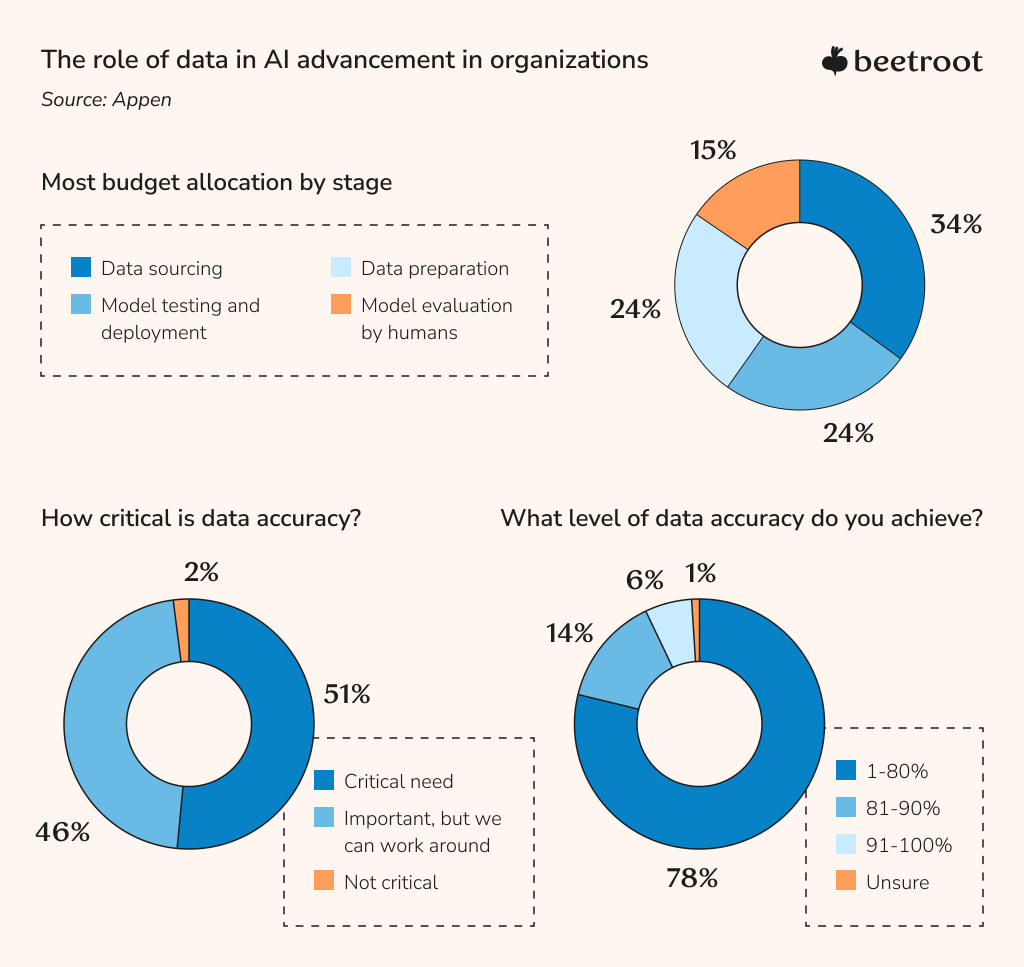

In the past, only large corporations had access to analytics tools, but they are now increasingly accessible to businesses of all sizes. Despite this, sourcing quality data remains the major bottleneck for many companies. According to Appen’s recent State of AI and Machine Learning report, data sourcing eats up 34% of a typical AI budget. At the same time, over half of Appen’s survey respondents believe that data accuracy is critical for their AI applications, while another 46% consider it important but manageable. Yet only 6% of businesses have achieved data accuracy of 90% or higher.

How to optimize AI systems for scalability

Scalability is vital for the long-term success of any AI project, especially in high-impact domains such as education, but achieving it is complex. AI-driven features should scale smoothly and handle growing data volumes for personalized learning recommendations and assessments. This requires careful planning across system elements such as data, architecture, and algorithms. Even seasoned tech organizations can struggle to move AI into production at scale, so following proven scaling practices is key to successful AI implementation.

Data quantity and preparation

It is essential to focus on both the quality and the quantity of data. Data quality covers accuracy and relevance, while data quantity covers size and diversity. A robust dataset is a prerequisite for effective model training, and the more contextually relevant the data, the more specific the model’s outputs. Scalable data management and validation processes are therefore critical for keeping a system stable and future-proof.
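
One way to keep validation scalable is to stream records in fixed-size batches. Memory use then stays flat as the dataset grows, and each batch could be handed to a separate worker. This is a hypothetical sketch: the schema in `REQUIRED_FIELDS` and the 0 to 1 score range are invented for illustration.

```python
from itertools import islice

# Hypothetical schema for a learning-activity record.
REQUIRED_FIELDS = {"student_id": str, "lesson_id": str, "score": float}


def validate(record: dict) -> list:
    """Return a list of problems; an empty list means the record is valid."""
    errors = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            errors.append(f"missing {name}")
        elif not isinstance(record[name], expected_type):
            errors.append(f"{name} should be {expected_type.__name__}")
    score = record.get("score")
    if isinstance(score, float) and not 0.0 <= score <= 1.0:
        errors.append("score outside [0, 1]")
    return errors


def batched(records, size):
    # Pull at most `size` records at a time from any iterable, including generators.
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch


def validate_stream(records, batch_size=1000):
    """Yield (valid, invalid) counts per batch without loading the whole dataset."""
    for batch in batched(records, batch_size):
        invalid = sum(1 for record in batch if validate(record))
        yield len(batch) - invalid, invalid


# Synthetic data: every tenth record has an out-of-range score.
records = (
    {"student_id": f"s{i}", "lesson_id": "l1", "score": 1.5 if i % 10 == 0 else 0.5}
    for i in range(2500)
)
for valid, invalid in validate_stream(records):
    print(valid, invalid)
```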

In our experience, transfer learning is the bridge between data quantity and quality in AI. It lets you start from a model pre-trained on vast amounts of general data, such as web text, academic articles, and open-source code, and then fine-tune it on a focused, contextualized dataset. A robust data infrastructure is the backbone that satisfies the data hunger of different AI techniques and makes scaling possible. A commonly cited estimate holds that data scientists spend about 80% of their time on data management and just 20% on the model, which underscores the need to supply AI not just with volume but with value.
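
The feature-extraction flavor of transfer learning can be shown in miniature. A frozen, pre-trained encoder supplies the representations, and only a small task-specific head is trained on the limited target data. In this toy sketch, `PRETRAINED_EMBEDDINGS` is a hypothetical stand-in for a real pre-trained model. The logistic-regression head and its hyperparameters are illustrative assumptions. Full fine-tuning would also update the encoder’s weights.

```python
import math

# Hypothetical stand-in for a frozen pre-trained encoder: in practice these vectors
# would come from a model trained on large general-purpose corpora.
PRETRAINED_EMBEDDINGS = {
    "photosynthesis": [0.9, 0.1, 0.0],
    "chlorophyll": [0.8, 0.2, 0.1],
    "fraction": [0.1, 0.9, 0.2],
    "denominator": [0.0, 0.8, 0.3],
}


def encode(text):
    # Frozen feature extractor: average known word vectors; never updated in training.
    vecs = [PRETRAINED_EMBEDDINGS[w] for w in text.lower().split() if w in PRETRAINED_EMBEDDINGS]
    if not vecs:
        return [0.0, 0.0, 0.0]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, epochs=200, lr=0.5):
    # Only this small logistic-regression head learns from the target-task data.
    dim = len(encode(examples[0][0]))
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            grad = p - label  # gradient of log loss with respect to the logit
            w = [wi - lr * grad * xi for wi, xi in zip(w, x)]
            b -= lr * grad
    return w, b


def predict(head, text):
    w, b = head
    return sigmoid(sum(wi * xi for wi, xi in zip(w, encode(text))) + b)


# Tiny labeled target set: 1 = biology topic, 0 = math topic.
examples = [("photosynthesis", 1), ("fraction", 0), ("chlorophyll", 1), ("denominator", 0)]
head = train_head(examples)
print(round(predict(head, "chlorophyll photosynthesis"), 2))
```

The head has only a handful of parameters, so four labeled examples are enough here. That is the practical appeal of reusing representations learned from much larger data.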

Architecture selection and design

Before starting the design process, define your scalability goals and metrics to ensure that your system architecture meets your business requirements and expectations. Apply design principles such as modularity, loose coupling, high cohesion, abstraction, and layering to optimize performance, efficiency, and maintainability. Select appropriate technologies, implement scalability patterns, and regularly test and monitor performance to verify that you reach your scalability targets.
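
Loose coupling and abstraction can be expressed with an interface that callers depend on instead of a concrete model. The sketch below assumes a lesson-recommendation feature. The `Recommender` protocol and both implementations are hypothetical examples, not a prescribed architecture.

```python
from typing import Dict, List, Protocol, Set


class Recommender(Protocol):
    def recommend(self, learner_id: str, k: int) -> List[str]: ...


class PopularityRecommender:
    """Hypothetical baseline: rank lessons by how often they have been completed."""

    def __init__(self, completions: Dict[str, int]):
        self._ranked = sorted(completions, key=completions.get, reverse=True)

    def recommend(self, learner_id: str, k: int) -> List[str]:
        return self._ranked[:k]


class HistoryAwareRecommender:
    """Hypothetical wrapper: filters out lessons the learner has already completed."""

    def __init__(self, base: Recommender, history: Dict[str, Set[str]]):
        self._base = base
        self._history = history

    def recommend(self, learner_id: str, k: int) -> List[str]:
        seen = self._history.get(learner_id, set())
        # Over-fetch by len(seen) so k unseen lessons remain after filtering, if they exist.
        candidates = self._base.recommend(learner_id, k + len(seen))
        return [lesson for lesson in candidates if lesson not in seen][:k]


class RecommendationService:
    """Depends only on the Recommender interface, not on a concrete model."""

    def __init__(self, recommender: Recommender):
        self._recommender = recommender

    def next_lessons(self, learner_id: str, k: int = 3) -> List[str]:
        return self._recommender.recommend(learner_id, k)


completions = {"algebra-1": 120, "geometry-1": 80, "fractions-1": 200, "reading-1": 50}
history = {"s1": {"fractions-1"}}
service = RecommendationService(
    HistoryAwareRecommender(PopularityRecommender(completions), history)
)
print(service.next_lessons("s1", k=2))
```

`RecommendationService` only sees the protocol. A heavier model could therefore replace the popularity baseline later, or run as a separate, independently scaled service, without changes to the calling code.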

Infrastructure optimization

Optimizing AI systems for scalability requires fine-tuning the supporting infrastructure, which includes hardware, software, and network resources. These elements affect speed, reliability, security, and cost. Aligning them with system goals and leveraging cloud, edge computing, and containerization is essential for optimal performance.

Vector databases are a crucial component of modern AI systems that often goes unnoticed. They store vectors (numerical representations of data) and retrieve the ones most similar to a query using measures such as cosine similarity. When paired with an embedding model that converts text into vectors, these databases let developers build conversational applications and small-scale search engines.
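
The core operation of a vector database is ranking stored vectors by cosine similarity to a query, and it can be sketched in a few lines. This brute-force index is for illustration only. Production vector databases typically use approximate nearest-neighbor indexes to stay fast at scale. The three-dimensional vectors below are made-up stand-ins for real embedding-model output.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


class InMemoryVectorIndex:
    """Hypothetical brute-force index: scores every stored vector against the query."""

    def __init__(self):
        self._items = []  # list of (item_id, vector) pairs

    def add(self, item_id, vector):
        self._items.append((item_id, list(vector)))

    def search(self, query, k=3):
        scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
        return scored[:k]


# Toy vectors standing in for embedding-model output (hypothetical values).
index = InMemoryVectorIndex()
index.add("lesson-fractions", [0.9, 0.1, 0.0])
index.add("lesson-photosynthesis", [0.0, 0.2, 0.9])
index.add("lesson-decimals", [0.8, 0.3, 0.1])
print(index.search([1.0, 0.2, 0.0], k=2))
```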

AI algorithm selection and refinement

The AI system’s decision-making, learning, and logic depend on the underlying algorithms, which determine its effectiveness, flexibility, and scalability. Therefore, it is vital to choose and improve algorithms that can produce the desired outcomes. Your team can employ reinforcement, transfer, and federated learning techniques to optimize the system’s scalability.
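
Federated learning is one of the techniques named above. Its best-known algorithm, federated averaging (FedAvg), trains locally on each client. The server then averages the resulting weights, weighted by how much data each client holds. The sketch assumes a toy one-feature linear model, two hypothetical clients and arbitrary learning-rate and round settings.

```python
def local_train(weights, data, lr=0.05, epochs=5):
    """Client-side step: a few epochs of SGD for y = w*x + b on data kept on the client."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return [w, b]


def federated_average(updates):
    """Server-side FedAvg: average client weights, weighted by local example count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(weights[i] * n for weights, n in updates) / total for i in range(dim)]


def run_rounds(clients, rounds=100):
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        # Each client starts from the current global model and returns only its weights.
        updates = [(local_train(global_weights, data), len(data)) for data in clients]
        global_weights = federated_average(updates)
    return global_weights


clients = [
    [(0, 1), (1, 3)],          # hypothetical client A: (x, y) pairs from y = 2x + 1
    [(2, 5), (3, 7), (4, 9)],  # hypothetical client B
]
w, b = run_rounds(clients)
print(round(w, 3), round(b, 3))
```

Only model weights travel to the server, and the raw learner data stays with each client. This is what makes the approach attractive for privacy-sensitive education data.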

Testing and monitoring system performance

Performance testing and continuous monitoring are top priorities for keeping your AI system scalable and resilient. Load testing and analyzing usage patterns help identify optimization opportunities. These activities also validate functionality, accuracy, and reliability and reveal areas for improvement. Make it a regular practice to review system performance and fine-tune your architecture, algorithms, and infrastructure to meet evolving demands. Integrating metrics and tools at different development stages helps you accurately track and improve the system’s scalability.
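
A basic load test fires many concurrent requests at a feature and reports latency percentiles, which usually reveal more than averages. In this sketch, `fake_inference` is a hypothetical stand-in for your real endpoint or model call. The request count and concurrency level are arbitrary.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_inference(prompt: str) -> str:
    # Hypothetical stand-in for the AI feature under test (5 to 20 ms of simulated work).
    time.sleep(random.uniform(0.005, 0.02))
    return prompt.upper()


def timed_call(fn, arg):
    start = time.perf_counter()
    fn(arg)
    return time.perf_counter() - start


def load_test(fn, requests, concurrency):
    """Run requests through a thread pool and summarize latency in milliseconds."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda r: timed_call(fn, r), requests))
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points: cuts[49] = p50, cuts[94] = p95
    return {
        "count": len(latencies),
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
        "max_ms": max(latencies) * 1000,
    }


result = load_test(fake_inference, [f"question {i}" for i in range(200)], concurrency=20)
print(result)
```

You can rerun the test at increasing `concurrency` values and watch where p95 latency starts to climb. This is a simple way to find the point at which the system needs more capacity.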

Usability and user experience

Optimizing AI systems for scalability requires focusing on the user experience as well as business objectives. A clear vision guides scalability preparations, whether you are targeting specific markets, expanding to other regions, or serving internal user groups. To ensure a positive user experience, take the time to understand and meet user needs, consistently gather and analyze feedback, and improve usability, accessibility, and personalization. When scaling a conversational AI across several locations, prioritize diverse training data and compliance with local regulations.

How to optimize AI for your business context

Yes, AI models offer significant benefits, such as solving complex problems, automating tasks, and making predictions. But how can you ensure that your AI models perform as you planned and are easy to understand for others? Below are some tips and best practices to help you navigate these challenges.

Define your goals and metrics

It’s essential to have a clear vision of your goals and challenges before implementing AI solutions. By determining whether you want to increase productivity, reduce costs, improve quality, or boost innovation, you can align your AI strategy with your business objectives and prioritize the most relevant and impactful use cases. Additionally, you should identify any bottlenecks, gaps, or risks in your current processes or systems.

To succeed in AI development, start with a well-defined vision and align it meticulously with your business goals. You may use the ACT framework — Alignment for syncing AI goals with business aims, Clarity in your code and architecture, and Transparency in decision-making — to create a trustworthy and efficient model. Integrate automation to scale your operations and establish clear milestones to keep your team focused on data-driven results.

Select the right AI tools and methods

Depending on your capabilities, you can build your own solutions, use pre-existing services, or partner with AI development teams. Selecting tools that closely align with your business requirements is crucial. So is choosing the right methods and algorithms, such as regression, classification, clustering, or deep learning, to achieve the desired results.

When developing AI, it’s important to prioritize diverse and representative data while balancing model accuracy and interpretability. As technology leaders, we should regularly consult with multi-disciplinary teams and maintain transparency in our processes to ensure our AI solutions remain effective and ethically accountable.

Train, test, and validate your AI models

After selecting your AI tools and platforms, train and test your AI models to confirm that they perform effectively and produce the desired results. We suggest viewing model evaluation as an opportunity for ongoing improvement, not a one-off task. Because data distributions shift over time, regular re-evaluation with new data and continuous monitoring are needed to maintain performance and reliability. Incorporating user and expert input on an ongoing basis also helps ensure the model aligns with human values.
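
Shifts in the distribution of model inputs can be tracked with the population stability index (PSI). It compares the binned distribution of a feature in recent data against a baseline. The sketch below uses equal-width bins derived from the baseline, and the bin count and smoothing constant are assumptions. The commonly quoted thresholds (below 0.1 stable, above 0.25 a significant shift) are rules of thumb rather than standards.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # eps keeps the log finite when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]  # hypothetical feature values at training time
shifted = [v + 0.5 for v in baseline]     # hypothetical production values after drift
print(round(psi(baseline, baseline), 4), round(psi(baseline, shifted), 2))
```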

Testing and validation will help you identify further areas of improvement. Use cross-validation and hold-out validation to estimate how accurately the model generalizes to unseen data. Use feature importance and partial dependence plots to understand which inputs drive its predictions, which supports interpretability and real-world adaptability.
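
The mechanics of hold-out and k-fold cross-validation are easy to see on a toy problem. Here the single-threshold classifier and the practice-hours data are hypothetical placeholders for a real model and dataset. The point is the splitting logic: across the k folds, every example is used for validation exactly once.

```python
import random


def holdout_split(n, test_fraction=0.2, seed=0):
    """Shuffle indices once and reserve a fraction for final testing."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[: n - n_test], idx[n - n_test :]


def k_fold_indices(n, k=5, seed=0):
    """Yield (train, validation) index lists; each index is validated exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, val in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, val


def fit_threshold(xs, ys):
    # Hypothetical toy model: the midpoint between the two class means.
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def accuracy(threshold, xs, ys):
    return sum((x >= threshold) == (y == 1) for x, y in zip(xs, ys)) / len(xs)


def cross_validate(xs, ys, k=5):
    scores = []
    for train, val in k_fold_indices(len(xs), k):
        t = fit_threshold([xs[i] for i in train], [ys[i] for i in train])
        scores.append(accuracy(t, [xs[i] for i in val], [ys[i] for i in val]))
    return sum(scores) / len(scores)


hours = list(range(20))                        # hypothetical practice hours per learner
passed = [1 if h >= 10 else 0 for h in hours]  # hypothetical pass/fail labels
train_idx, test_idx = holdout_split(len(hours))
print(len(train_idx), len(test_idx), cross_validate(hours, passed))
```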

Deploy, monitor, and update your AI solutions

Since AI systems are dynamic, governance must be an ongoing task. To work efficiently and as planned, AI features need continuous maintenance so they integrate smoothly with your current operations and tools and keep addressing user pain points. Successful AI implementation requires good management practices, governance frameworks, and seamless UI/UX. Also, never underestimate the power of user acceptance: training people to use your product and gathering feedback for further improvements are crucial to its success and to the value it brings to end users.
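
Ongoing monitoring can start with something as simple as tracking a rolling rate of positive user feedback and flagging the feature when that rate drops. The class name, window size, threshold and minimum sample count in this sketch are hypothetical defaults to tune for your product.

```python
from collections import deque


class RollingQualityMonitor:
    """Hypothetical monitor: share of helpful ratings over the last `window` responses."""

    def __init__(self, window=100, threshold=0.8, min_samples=20):
        self._outcomes = deque(maxlen=window)  # oldest ratings drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, helpful: bool) -> None:
        self._outcomes.append(helpful)

    def helpful_rate(self):
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def needs_attention(self) -> bool:
        # Skip alerts until enough ratings exist, then compare the rolling rate to the threshold.
        if len(self._outcomes) < self.min_samples:
            return False
        return self.helpful_rate() < self.threshold


monitor = RollingQualityMonitor(window=50, threshold=0.8, min_samples=20)
for _ in range(30):
    monitor.record(True)
for _ in range(20):
    monitor.record(False)
print(monitor.helpful_rate(), monitor.needs_attention())
```

In practice, a raised flag might trigger a review of recent conversations, a data refresh or retraining. Which response fits depends on your governance process.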

Engage and educate your stakeholders

Keep your internal and external stakeholders informed about your AI projects and their benefits to build trust and satisfaction. That means communicating clearly and transparently with your employees, customers, partners, and regulators about how you use AI, what outcomes you expect, and which ethical guidelines you follow. Consider providing extra training and support to help these groups interact effectively with your AI tools.

Remember, the key to success with AI is finding the proper balance between human expertise and machine intelligence; investing in education and expertise will help you get there. By embracing new approaches and taking advantage of the latest technologies, you can innovate, save money, and improve your performance for years to come. And if you’re looking for a strategic development partner to accompany you on your AI journey, Beetroot is here to help — drop us a line, and our experts will quickly contact you to connect.
